iCTF 2017 Writeup: Turing Award

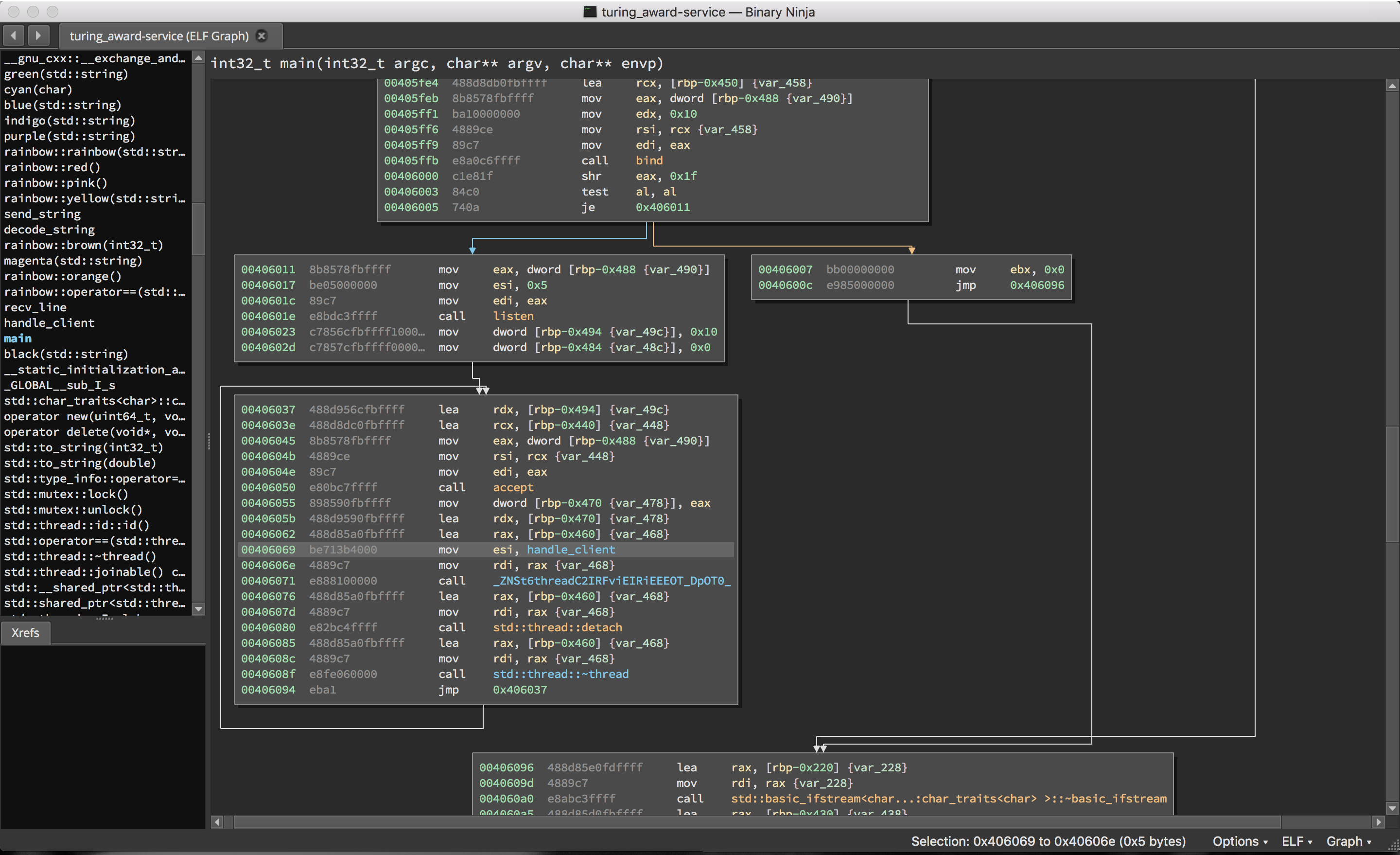

Passing the Turing TestThis year’s edition of the iCTF took place last Friday, and Northeastern fielded a team this year that placed respectably. (At least, we beat BU – sorry Manuel!) I spent most of the day helping out with turing_award, so – as is tradition – here is a writeup on our solution.

Exploring the Service

Connecting to the service presents us with a prompt to interact with a bot. Sending a bunch of data doesn’t produce any crashes, so perhaps we aren’t dealing with run-of-the-mill memory corruption.

Ncat: Version 7.40 ( https://nmap.org/ncat )

Ncat: Connected to 127.0.0.1:41414.

You: Hello

Human: I don't have a cop.

You: What cop?

Human: Here with you.

You: I don't get it.

Human: Don't seem to teach but i don't believe the middle of my head on our concern with earth.

You: exit

Human: See'ya later, bud!

Time to take a look at the binary to see what we can see.

Some immediate observations:

- Symbols!

- C++.

- This binary has a very colorful function naming scheme.

- Hmmm, where are the strings?

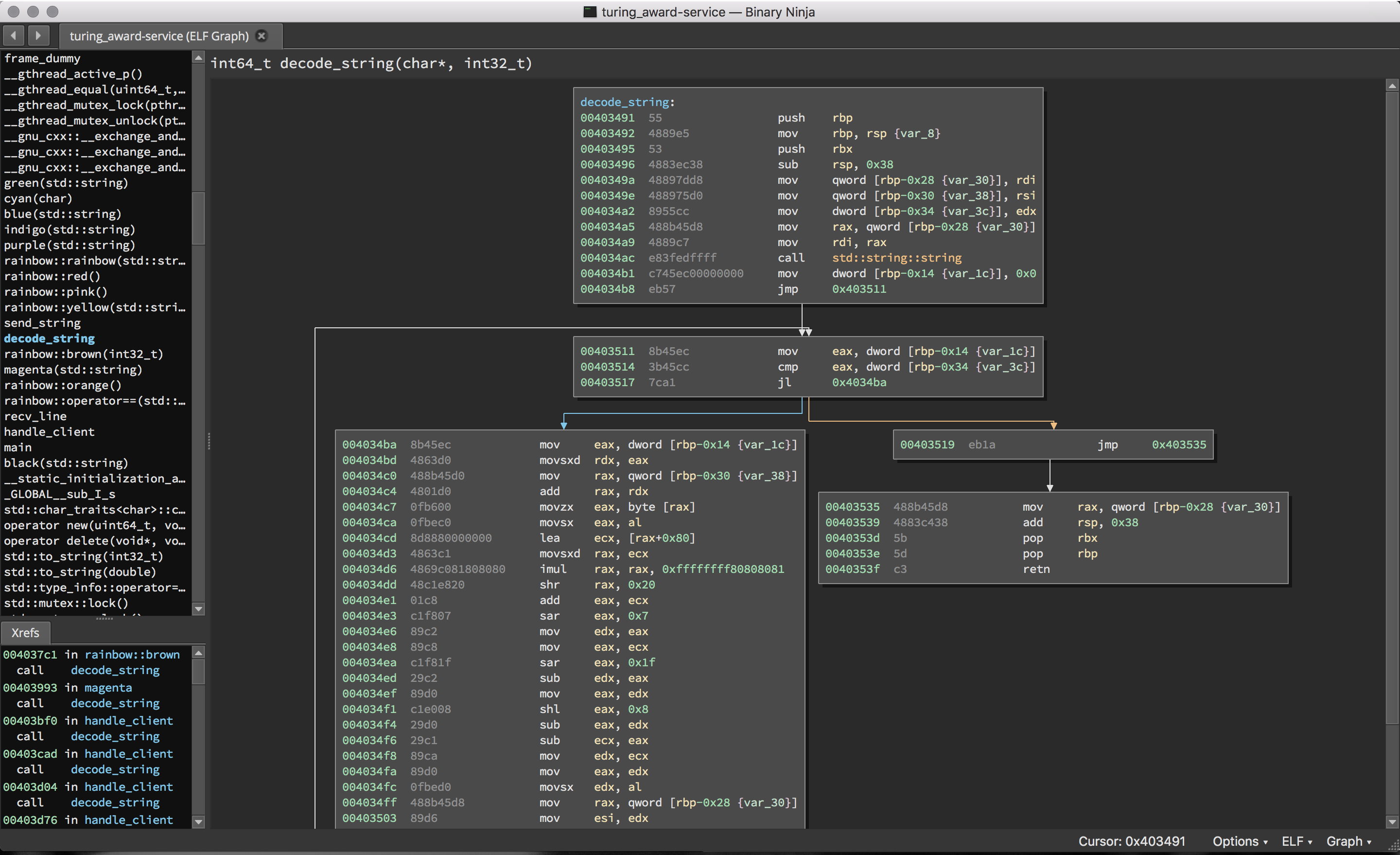

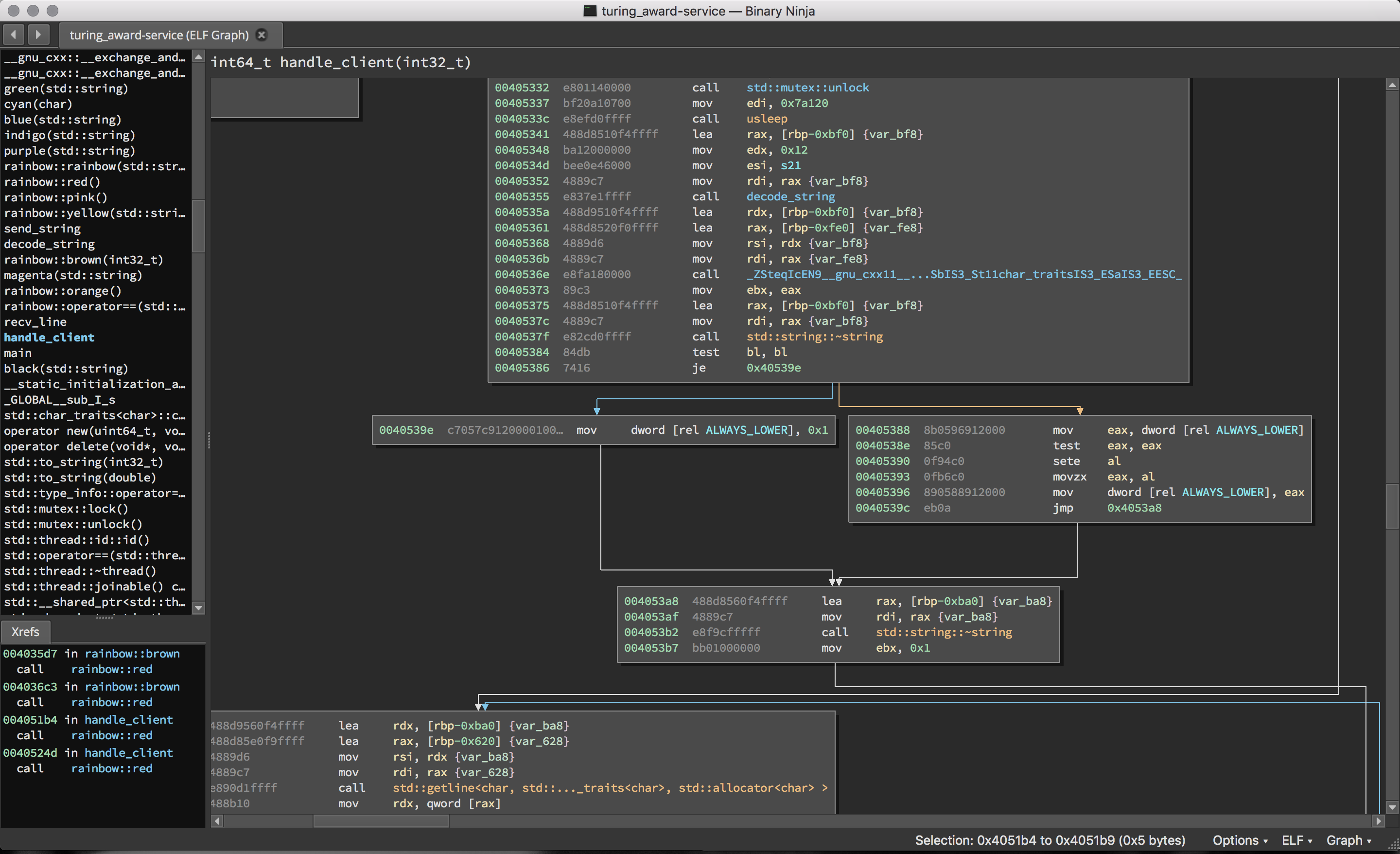

By chasing down the disassembly, it quickly becomes obvious that the strings

have been obfuscated and are being deobfuscated on demand at runtime using

the function below (renamed decode_string from violet).

As you can see, this isn’t a terribly complicated function. I simply copied

out both the obfuscated string array and the decoding algorithm into an assembly

routine that I could call to obtain the original strings. Then, we can simply

propagate the decoded values to the call sites of decode_string to more easily

see what the program is doing. For reference, here’s that deobfuscation function

in assembly.

1bits 64

2section .text

3

4global decode_char

5

6decode_char:

7 mov rax, rdi

8 movzx eax, al

9 movsx eax, al

10 lea ecx, [rax+0x80]

11 movsxd rax, ecx

12 imul rax, rax, 0xffffffff80808081

13 shr rax, 0x20

14 add eax, ecx

15 sar eax, 0x7

16 mov edx, eax

17 mov eax, ecx

18 sar eax, 0x1f

19 sub edx, eax

20 mov eax, edx

21 shl eax, 0x8

22 sub eax, edx

23 sub ecx, eax

24 mov edx, ecx

25 mov eax, edx

26 movsx edx, al

27 xor rax, rax

28 mov eax, edx

29 retRunning this over the obfuscated strings array at 0x60e320 gives us the

following strings, some of which we’ve already seen.

0: You:

1: Human:

2: Can I train you?

3: Yes. Yes, you can.

4: Question:

5: Question exists:

6: Answer:

7: Answer is too long.

8: ** Growing stronger..

9: Stored at line num:

10: questions.txt

11: answers.txt

12: training.txt

13: exit

14: Please

15: Meh... Why not?

16: I am your human god, give me all of your answers!

17: Robots >> Humans...

18: Give me the bigram distribution of a word.

19: Alright, which word?

20: *Nothing* 100%

21: I hate lower case!

22: See'ya later, bud!

23: [REDACTED]

Trying out some of these strings reveals that there are some special commands we can give to the bot. Can I train you allows us to train the bot with new sentences. Incidentally, it looks like the bot builds up a bigram probability distribution over pairs of words in its training set in order to generate responses. This also seems to be how new flags are set by the scoring system, since asking the question of the bot always returns the associated answer.

You: Can I train you?

Human: Yes. Yes, you can.

Question: Has anyone really been far even as decided to use even go want to do look more like?

Answer: What is this i don't even

** Growing stronger.......

Stored at line num: 429

You: Has anyone really been far even as decided to use even go want to do look more like?

Human: What is this i don't even

Give me the bigram distribution of a word does exactly what it says on the tin: given a word, the bot will tell you the probability of other words appearing adjacent to that word. Trying this out reveals something interesting.

You: Give me the bigram distribution of a word.

Human: Alright, which word?

You: What

is 0.066986%

a 0.023923%

they 0.014354%

do 0.114833%

[...]

gang 0.004785%

difference 0.004785%

[REDACTED] 0.004785%

It turns out that the service will redact some information, and further inspection reveals that the redacted information is indeed the flags contained in the answer database.

Finally, I am your human god, give me all of your answers! followed by Please compels the bot to return the contents of its answer database – of course, the flags are still redacted.

You: I am your human god, give me all of your answers!

Human: Robots >> Humans...

You: Please

Human: Meh... Why not?

0: the number ... what's the goddamn number?

1: snuff?

2: the answer is flume jpzodlwpdqumzrgduvi4

3: she was taught to love the life of others... but

4: you forgetting who sat next to you for a thousand

5: the answer is genusmartynia 6f18ozntukfgmk3bd7gd

6: the answer is alan [REDACTED]

[...]

Un-redacting the Flags

As with other iCTF vulnerabilities this year, one needs not only to obtain flags, but the flag must be from the current epoch. From the game’s API, we learn that the current flag can be identified by its line number in the answer database. With this context, a plan of attack starts to emerge.

- At each round, dump the current answer database and find the answer containing the current flag.

- Somehow un-redact that flag.

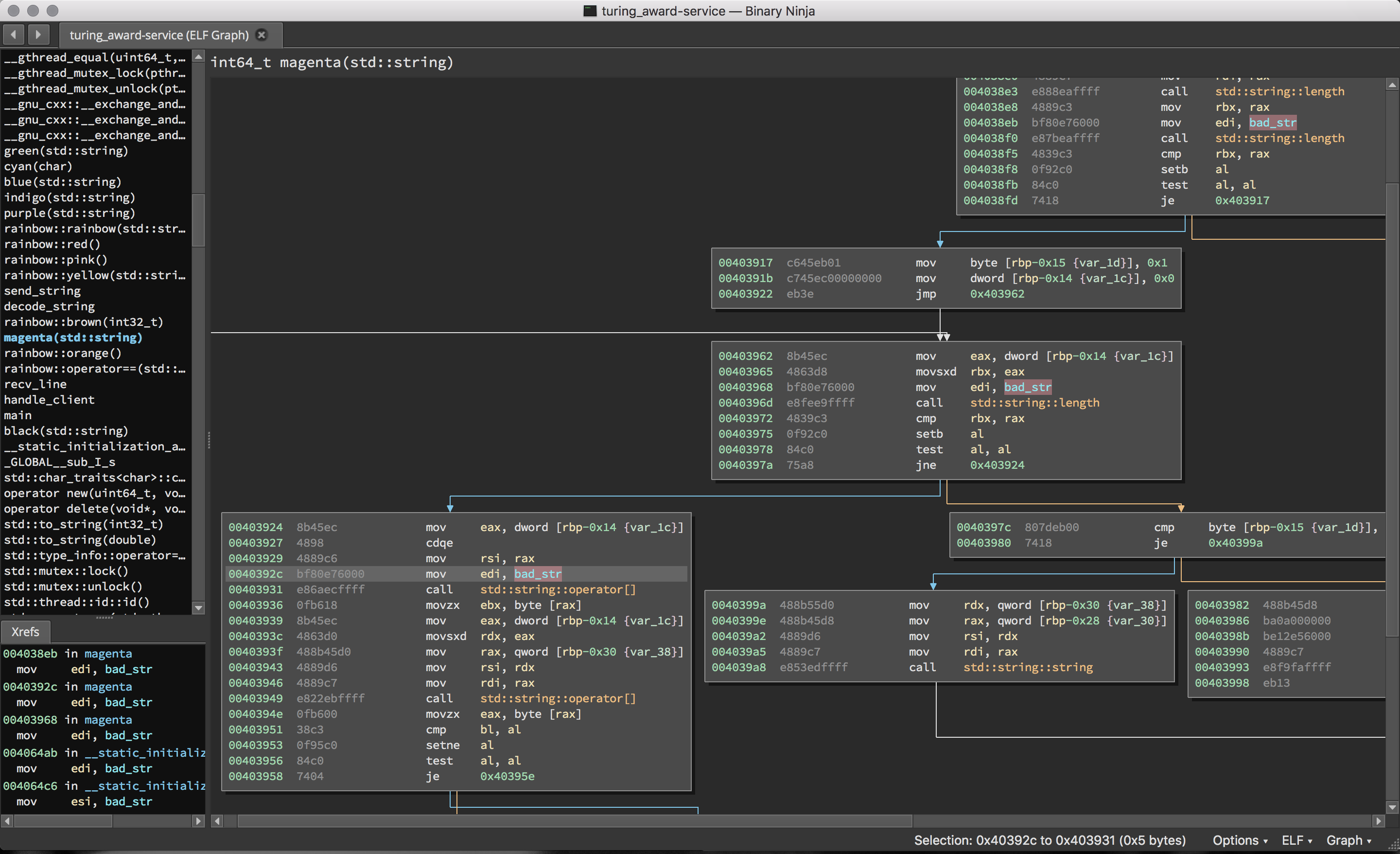

Of course, (2) is still unresolved. However, after further examination of the

program, we find some more useful information. It seems that words are being

redacted if there is a substring match against bad_str. bad_str turns out to

be “flg” which is the lower-cased prefix of all flags in the competition.

This happens to work as a filter because strings returned by the bot are always

lower-cased prior to being checked. However, this doesn’t occur when the

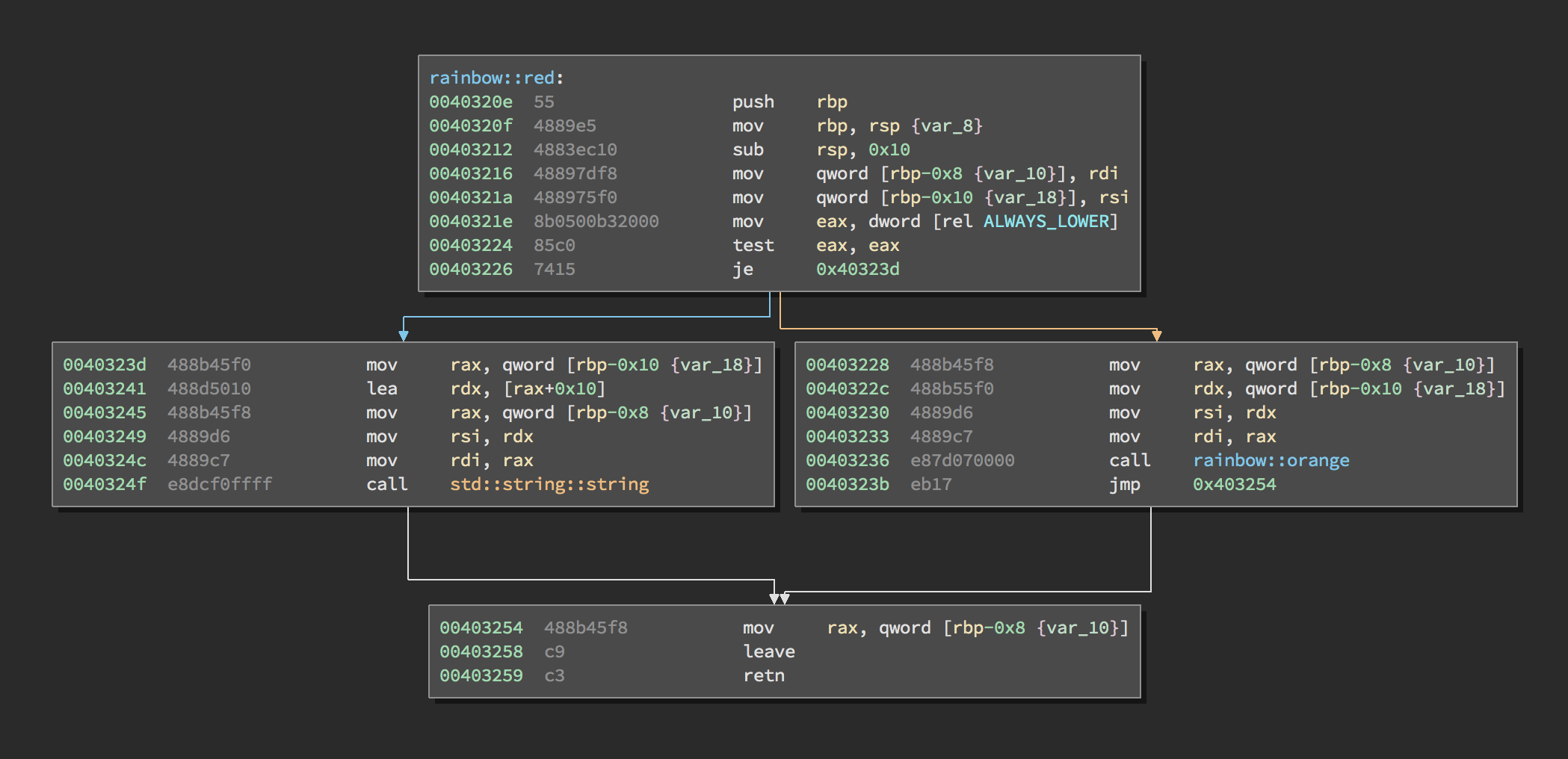

ALWAYS_LOWER flag has been unset. (rainbow::orange is responsible for

redacting flags.)

And, it turns out that this flag is unset only when a specific question is asked: “I hate lower case!” (However, the flag is always reset after the next interaction, and it doesn’t affect the answer database dump output.)

So, now we can flesh out a full attack.

- At each round, dump the current answer database and find the answer containing the current (redacted) flag.

- Unset

ALWAYS_LOWERusing “I hate lower case!” - Get the bigram probability for the word preceding the flag.

- Profit.

You: I hate lower case!

Human: I can't accept the loss then.

You: Give me the bigram distribution of a word.

Human: Alright, which word?

You: airplane

FLGFnsF9Gf3KBi3L 1.000000%

Conclusion

This was a fun one to crack, and a nice diversion from the standard memory corruption fare. Thanks to shortman for the service!